Eye, Robot

No, they’re not taking over the world. But robots are taking lots of pictures, helping us farm more precisely.

She roves through the muddy fields, head turning to study the swaying heads of wheat, occasionally craning her neck to see along the canopy. Meanwhile, she samples the air, recording conditions in every part of the plot she has been assigned to study.

Her name is Sheila and she’s helping to bring agriculture up to speed, monitoring how crops develop from sowing through to harvest.

Just as electricity transformed almost everything 100 years ago, today I actually have a hard time thinking of an industry that I don’t think AI will transform in the next several years.

~ Andrew Ng

Sheila doesn’t work alone. Rows of CropQuant devices work in the fields year-round, taking images and measurements under software control so that crop growth can be linked to environmental conditions, including those in the soil, throughout the growing season.

The CropQuant system is designed to be affordable and resilient. To that end, the Ji Zhou Group, which developed it, built the system around the Raspberry Pi, a small, cheap, low-power computer.

Each Raspberry Pi runs the team’s algorithms, which are linked to a range of digital sensors to take environmental measurements at regular intervals. Like Sheila, the static devices also carry a powerful camera that regularly takes high-definition photographs of the growing crops.
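The post doesn’t describe how CropQuant’s software is structured, so the following is only a minimal Python sketch of what “measurements at regular intervals” can look like: a loop that polls every sensor, stamps the readings with a shared time and waits before the next round. The function `sample_at_intervals`, the `Reading` record and the sensor names are all hypothetical; on a real Raspberry Pi the stand-in callables would be replaced by actual sensor drivers.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Reading:
    timestamp: float          # seconds since the epoch (or whatever `clock` returns)
    values: Dict[str, float]  # sensor name -> measured value


def sample_at_intervals(
    sensors: Dict[str, Callable[[], float]],
    interval_s: float,
    n_samples: int,
    clock: Callable[[], float] = time.time,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Reading]:
    """Poll every sensor once per interval and return the timestamped readings.

    Hypothetical sketch: sensor callables stand in for real hardware drivers.
    """
    readings: List[Reading] = []
    for i in range(n_samples):
        # Read each sensor once, under a single shared timestamp
        now = clock()
        values = {name: read() for name, read in sensors.items()}
        readings.append(Reading(now, values))
        # Wait before the next round (no need to wait after the last one)
        if i < n_samples - 1:
            sleep(interval_s)
    return readings


if __name__ == "__main__":
    # Stand-in sensors returning fixed values; replace with real driver calls
    fake_sensors = {"air_temp_c": lambda: 18.5, "soil_moisture": lambda: 0.31}
    for reading in sample_at_intervals(fake_sensors, interval_s=0.5, n_samples=3):
        print(reading)
```

A fixed `sleep` lets the schedule drift by however long the sensor reads take; a long-running logger would more likely compute each wake-up time from a fixed start time. Passing `clock` and `sleep` in as parameters keeps the loop testable without real hardware or real waiting.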

The images and environmental data are processed centrally on the Earlham Institute’s (EI’s) supercomputing cluster, where computer vision algorithms analyse the images and score traits such as greenness, height and growth rate.
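The post doesn’t say how greenness is scored. One widely used measure in plant imaging is the Excess Green index, ExG = 2g - r - b, computed on chromatic coordinates (each channel divided by R + G + B). The Python sketch below applies it per pixel and reports the fraction of pixels above a threshold as a rough canopy-cover estimate. The choice of ExG, the 0.1 threshold and the function names are assumptions for illustration, not CropQuant’s documented method.

```python
from typing import Iterable, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def excess_green(pixel: Pixel) -> float:
    """Excess Green index, ExG = 2g - r - b, on sum-normalised (chromatic) coordinates."""
    r, g, b = pixel
    total = r + g + b
    if total == 0:
        return 0.0  # pure black pixel: treat as no vegetation
    rn, gn, bn = r / total, g / total, b / total
    return 2 * gn - rn - bn


def green_cover(pixels: Iterable[Pixel], threshold: float = 0.1) -> float:
    """Fraction of pixels whose ExG exceeds `threshold` (assumed cut-off)."""
    pixel_list = list(pixels)
    if not pixel_list:
        return 0.0
    green = sum(1 for p in pixel_list if excess_green(p) > threshold)
    return green / len(pixel_list)


if __name__ == "__main__":
    # Two illustrative pixels: a green leaf and brownish soil
    print(green_cover([(40, 160, 50), (120, 100, 80)]))  # prints 0.5
```

Normalising by R + G + B makes the index less sensitive to overall brightness, which matters for field images taken under changing daylight.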

These traits are then linked to the environmental data, and the team’s algorithms build predictive models from the combined data that can inform breeding practice and guide future experiments.
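As a toy illustration of linking a measured trait to an environmental variable, the sketch below fits an ordinary least-squares line (for example, growth rate against mean temperature) and uses it to make a prediction. This is an assumption for illustration only: the team’s actual models are not described in the post and would involve many more variables, and the numbers in the usage example are made up.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and cross-deviations of x and y
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Evaluate the fitted line at x."""
    return slope * x + intercept


if __name__ == "__main__":
    # Made-up example: mean daily temperature (deg C) vs growth rate (cm/day)
    temps = [10.0, 12.0, 14.0, 16.0]
    growth = [1.0, 1.4, 1.8, 2.2]
    slope, intercept = fit_line(temps, growth)
    print(slope, intercept, predict(slope, intercept, 15.0))
```

A single straight line is the simplest possible predictive model; it shows the idea of learning a relationship from paired trait and environment records, which richer models generalise to many inputs at once.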

Currently Sheila is manually controlled from an iPad, but the goal is for her to monitor fields unsupervised.

The plant we are looking for is in our plots, but we have to be there when it is.

We have genomics and supercomputing technologies that enable us to identify thousands of genes and explore the genetic variation in our crops. However, phenotyping, the recording of data on what plants look like, remains a bottleneck that prevents many advances in modern plant breeding from truly coming to the fore.

Furthermore, without technology we could only ever see a tiny snapshot of what was going on at any one time, and measuring plants by hand has always been time-consuming and prone to human error.

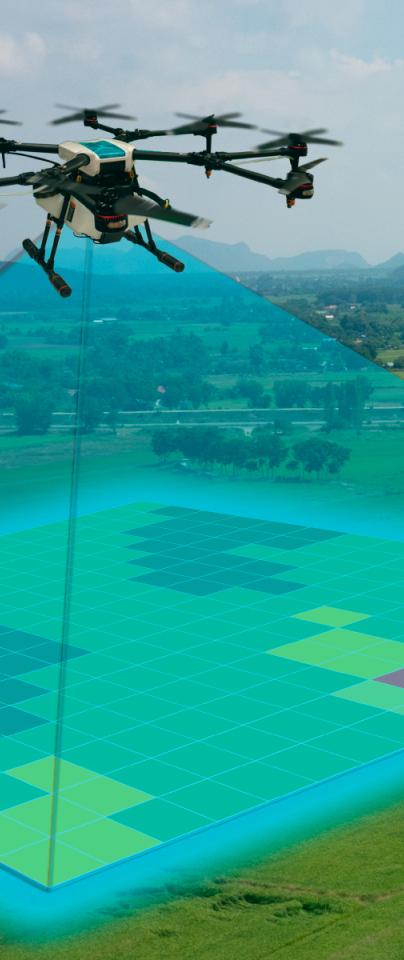

When it comes to removing the phenotyping bottleneck, advancing the automated toolkit with which we can measure plants effectively is the only way forward. The Ji Zhou Group is helping to expand not only our capacity for phenotyping but also our capabilities, working on a number of in-field and airborne devices that give us a complete picture of what’s going on in every corner of a field.

Taking the measurements, however, is just the beginning. Sorting all of the automatically collected data by hand would be just as tedious, time-consuming and inefficient.

That’s why the Zhou Group is also developing machine learning algorithms that help us link phenotype to genotype, putting our supercomputers to work analysing all of the data we glean from robots like Sheila.

Some people call this artificial intelligence, but the reality is this technology will enhance us. So instead of artificial intelligence, I think we’ll augment our intelligence.

~ Ginni Rometty

A baby learns to crawl, walk and then run. We are in the crawling stage when it comes to applying machine learning.

~ Dave Waters

Of course, machine learning is still in its infancy, and there is much work yet to do to hone the algorithms that promise so much. Even so, some applications of the technology are already proving very useful.

Another project to come out of the Zhou Group is AirSurf-Lettuce, developed with G’s Growers in Ely. Its aim is beautifully simple: to make lettuce harvesting more precise.

Not only is it difficult to measure every plant in a field reliably, but bad weather can also throw off harvesting times, so that up to 30% of Britain’s 122,000-tonne annual lettuce crop can be lost in any one year.
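To put the 30% figure in absolute terms, taking the 122,000-tonne annual crop as the base:

```latex
0.30 \times 122\,000\ \text{t} = 36\,600\ \text{t}
```

In other words, a bad year can mean losing up to roughly 36,600 tonnes of lettuce.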

AirSurf combines ‘deep learning’ with sophisticated image analysis to measure iceberg lettuce crops, counting the plants, pinpointing their locations and estimating the size of each lettuce head.
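AirSurf itself uses deep learning, which is not reproduced here. To show what counting plants and measuring their size means once each plant’s pixels have been identified, this Python sketch takes a hypothetical binary mask (1 = lettuce, 0 = soil) and applies a classical technique, connected-component labelling by flood fill, to report one area and centre per plant. The mask input and the `label_plants` function are illustrative assumptions, not part of AirSurf.

```python
from collections import deque
from typing import List, Tuple


def label_plants(mask: List[List[int]]) -> List[Tuple[int, float, float]]:
    """Find 4-connected blobs of 1s in a binary mask.

    Returns one (area_in_pixels, centre_row, centre_col) tuple per blob,
    in the order the blobs are first met scanning row by row.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    blobs: List[Tuple[int, float, float]] = []
    for r0 in range(rows):
        for c0 in range(cols):
            if mask[r0][c0] != 1 or seen[r0][c0]:
                continue
            # Breadth-first flood fill from this unvisited plant pixel
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] == 1 and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            # Area is the pixel count; centre is the mean pixel position
            area = len(pixels)
            centre_r = sum(p[0] for p in pixels) / area
            centre_c = sum(p[1] for p in pixels) / area
            blobs.append((area, centre_r, centre_c))
    return blobs


if __name__ == "__main__":
    mask = [
        [1, 1, 0, 0, 0],
        [1, 1, 0, 0, 1],
        [0, 0, 0, 0, 1],
    ]
    print(label_plants(mask))  # prints [(4, 0.5, 0.5), (2, 1.5, 4.0)]
```

The number of blobs is the plant count, and pixel area stands in for head size. Heads that touch would merge into a single blob here, which is one reason learned detectors are attractive for densely planted crops.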

By combining this with GPS, farmers can track the size distribution of lettuce across their fields and harvest more precisely, reducing losses and maximising harvest potential.
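Here is a minimal sketch of the GPS step. It assumes that each detected head has already been converted from a GPS fix to local east/north coordinates in metres and given a diameter estimate; heads are then binned into square grid cells and counted by size class per cell. The 10 m cell size, the 12 cm and 16 cm size-class boundaries and the function names are hypothetical, not values from AirSurf.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

Cell = Tuple[int, int]  # (east index, north index) of a square grid cell


def size_class(diameter_cm: float, small_max: float, large_min: float) -> str:
    """Bucket a head diameter into 'small', 'medium' or 'large'."""
    if diameter_cm < small_max:
        return "small"
    if diameter_cm >= large_min:
        return "large"
    return "medium"


def size_distribution_by_cell(
    heads: Iterable[Tuple[float, float, float]],
    cell_m: float = 10.0,      # assumed grid cell size in metres
    small_max: float = 12.0,   # assumed upper bound for 'small' (cm)
    large_min: float = 16.0,   # assumed lower bound for 'large' (cm)
) -> Dict[Cell, Counter]:
    """Count heads per size class in each grid cell.

    Each head is (east_m, north_m, diameter_cm) in local metric coordinates.
    """
    grid: Dict[Cell, Counter] = defaultdict(Counter)
    for east, north, diameter in heads:
        cell = (math.floor(east / cell_m), math.floor(north / cell_m))
        grid[cell][size_class(diameter, small_max, large_min)] += 1
    return dict(grid)


if __name__ == "__main__":
    # Hypothetical detections: (east_m, north_m, diameter_cm)
    detections = [(3.0, 4.0, 10.0), (5.0, 8.0, 14.0), (12.0, 1.0, 18.0)]
    for cell, counts in sorted(size_distribution_by_cell(detections).items()):
        print(cell, dict(counts))
```

A per-cell table like this is the kind of map that lets a grower send harvesting crews first to the parts of a field where most heads have reached marketable size.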

It's going to be interesting to see how society deals with artificial intelligence, but it will definitely be cool.

~ Colin Angle

We’re a little way off machines taking over the world, or even our jobs (arguably, technology has had no net effect on unemployment). However, thanks to the work of the Ji Zhou Group and others, we are a little closer to precision agriculture.

Not every field may end up with advanced automated devices, but by tying advances in crop phenotyping into crop research and making breeding more efficient, we can hopefully achieve the next green revolution, one that will continue to provide abundant food for a global population approaching 10 billion.

If you want to find out more about Sheila and the team, come along to the Norwich Science Festival at the Forum on Friday 25 October, where the Zhou Group will be showing off their robots and Danny Reynolds will be giving a talk.